61. Build the LeNet-5 Estimator

61.1. Introduction

In the previous experiments, we built the classic LeNet-5 convolutional neural network in four different ways: with TensorFlow's low-level `tf.nn` module and high-level `tf.keras` module, and with PyTorch's `nn.Module` and `nn.Sequential`. In this challenge, we will use the TensorFlow Estimator high-level API covered earlier to rebuild LeNet-5 and train it.

61.2. Key Points

Usage of TensorFlow Estimator

LeNet-5 Convolutional Neural Network

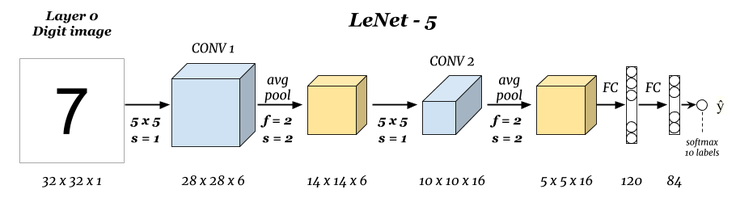

61.3. LeNet-5 Architecture

So far, we have implemented the LeNet-5 convolutional neural network in four ways across two frameworks, so its architecture should be very familiar by now.

The experiment on TensorFlow's high-level API also introduced TensorFlow Estimator. Estimator has many advantages, especially for deployment in production environments, and the example code released with many high-quality papers is distributed as Estimators.

In this challenge, you will therefore write a custom Estimator for the LeNet-5 network in TensorFlow on your own and complete the training. Because the previous experiments only covered the predefined Estimators that ship with TensorFlow, this challenge provides some material on custom Estimators for you to study.
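As a quick refresher on the architecture, the feature-map sizes and parameter counts can be traced with plain arithmetic. The sketch below is not part of the course code: the helper names are hypothetical, and it assumes unpadded ("valid") convolutions and a second convolutional layer that connects to all six input maps, as a Keras `Conv2D` layer does.

```python
def valid_out(size, window, stride=1):
    """Output width of an unpadded convolution or pooling window."""
    return (size - window) // stride + 1


def conv_params(kernel, in_channels, out_channels):
    """Weights plus biases of a square-kernel convolutional layer."""
    return kernel * kernel * in_channels * out_channels + out_channels


def dense_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out


c1 = valid_out(32, 5)        # 5x5 convolution on the 32x32 input
p1 = valid_out(c1, 2, 2)     # 2x2 average pooling, stride 2
c2 = valid_out(p1, 5)        # second 5x5 convolution
p2 = valid_out(c2, 2, 2)     # second 2x2 average pooling
flat = p2 * p2 * 16          # flattened size fed to the dense layers

feature_sizes = [c1, p1, c2, p2, flat]
param_counts = {
    "conv1": conv_params(5, 1, 6),
    "conv2": conv_params(5, 6, 16),
    "fc1": dense_params(flat, 120),
    "fc2": dense_params(120, 84),
    "output": dense_params(84, 10),
}
total_params = sum(param_counts.values())

print(feature_sizes)
print(param_counts, total_params)
```

Comparing these numbers with the shapes reported by your own model is a simple way to check that the Estimator reproduces the architecture.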

Exercise 61.1

Open-ended Challenge

Challenge: Build an Estimator with the LeNet-5 architecture using TensorFlow and complete model training and evaluation.

Specification: The network architecture must match LeNet-5 exactly. You are free to choose the loss function, the optimizer, and the other hyperparameters. The MNIST data and the preprocessing steps are provided below.

First, we load the required MNIST data.

```python
import numpy as np
import tensorflow as tf

# Download the MNIST NumPy data from the course mirror server
DATA_URL = "https://cdn.aibydoing.com/aibydoing/files/mnist.npz"

path = tf.keras.utils.get_file("mnist.npz", DATA_URL)
with np.load(path) as data:
    # Pad the 28x28 images to 32x32
    x_train = np.pad(
        data["x_train"].reshape([-1, 28, 28, 1]),
        ((0, 0), (2, 2), (2, 2), (0, 0)),
        "constant",
    )
    y_train = data["y_train"]
    x_test = np.pad(
        data["x_test"].reshape([-1, 28, 28, 1]),
        ((0, 0), (2, 2), (2, 2), (0, 0)),
        "constant",
    )
    y_test = data["y_test"]

x_train.shape, y_train.shape, x_test.shape, y_test.shape
```

```
((60000, 32, 32, 1), (60000,), (10000, 32, 32, 1), (10000,))
```
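The padding argument `((0, 0), (2, 2), (2, 2), (0, 0))` gives one `(before, after)` pair per axis: nothing is added along the batch and channel axes, and two rows or columns of zeros are added on each side of the height and width axes. A minimal sketch on a dummy batch (the array of ones is made up for illustration and stands in for the real images):

```python
import numpy as np

# Hypothetical stand-in for a batch of three 28x28 single-channel images
batch = np.ones((3, 28, 28, 1), dtype=np.uint8)

# Same pad widths as in the preprocessing step above
padded = np.pad(batch, ((0, 0), (2, 2), (2, 2), (0, 0)), "constant")

# The original pixels sit in the centre; everything around them is zero
centre = padded[:, 2:-2, 2:-2, :]

print(padded.shape)
print(int(centre.sum()), int(padded.sum()))
```

Because the padded border contains only zeros, the sum over the whole padded array equals the sum over its centre.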

Solution to Exercise 61.1

```python
import tensorflow as tf


def lenet_fn(features, labels, mode):
    # Cast defensively in case the input function supplies integer arrays
    inputs = tf.cast(features["x"], tf.float32)

    # Convolutional layer: 6 kernels of size 5x5, stride 1, ReLU activation.
    # input_shape is specified on the first layer
    conv1 = tf.keras.layers.Conv2D(
        filters=6, kernel_size=(5, 5), strides=(1, 1),
        activation='relu', input_shape=(32, 32, 1))(inputs)
    # Average pooling with a 2x2 window and stride 2
    pool1 = tf.keras.layers.AveragePooling2D(
        pool_size=(2, 2), strides=2)(conv1)
    # Convolutional layer: 16 kernels of size 5x5, stride 1, ReLU activation
    conv2 = tf.keras.layers.Conv2D(
        filters=16, kernel_size=(5, 5), strides=(1, 1),
        activation='relu')(pool1)
    # Average pooling with a 2x2 window and stride 2
    pool2 = tf.keras.layers.AveragePooling2D(
        pool_size=(2, 2), strides=2)(conv2)
    # Flatten before connecting to the fully connected layers
    flatten = tf.keras.layers.Flatten()(pool2)
    # Fully connected layer: 120 units, ReLU activation
    fc1 = tf.keras.layers.Dense(units=120, activation='relu')(flatten)
    # Fully connected layer: 84 units, ReLU activation
    fc2 = tf.keras.layers.Dense(units=84, activation='relu')(fc1)
    # Output layer: 10 units, no activation here because the loss below
    # applies softmax to the logits itself
    logits = tf.keras.layers.Dense(units=10)(fc2)

    # Calculate the loss (TF1-style ops are reached through tf.compat.v1)
    loss = tf.compat.v1.losses.sparse_softmax_cross_entropy(
        labels=tf.cast(labels, tf.int32), logits=logits)

    # Training mode
    if mode == tf.estimator.ModeKeys.TRAIN:
        optimizer = tf.compat.v1.train.AdamOptimizer(learning_rate=0.001)
        train_op = optimizer.minimize(
            loss=loss, global_step=tf.compat.v1.train.get_global_step())
        return tf.estimator.EstimatorSpec(
            mode=mode, loss=loss, train_op=train_op)

    # Evaluation mode
    if mode == tf.estimator.ModeKeys.EVAL:
        eval_metric_ops = {
            "accuracy": tf.compat.v1.metrics.accuracy(
                labels=labels, predictions=tf.argmax(input=logits, axis=1))}
        return tf.estimator.EstimatorSpec(
            mode=mode, loss=loss, eval_metric_ops=eval_metric_ops)
```
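The `sparse_softmax_cross_entropy` loss expects unnormalized scores and applies softmax internally. The NumPy sketch below illustrates what would happen if probabilities that have already passed through softmax were fed to it instead. It is only an illustration: the helper functions re-implement the idea by hand rather than calling TensorFlow, and the example scores are made up.

```python
import numpy as np


def softmax(z):
    """Numerically stable softmax over the last axis."""
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def sparse_cross_entropy(scores, label):
    """Cross-entropy that applies softmax to its input, like the TF loss."""
    return -np.log(softmax(scores)[label])


# Hypothetical scores for one image: the network is very confident in class 0
scores = np.array([8.0] + [0.0] * 9)
label = 0

# Softmax applied once, inside the loss
loss_once = sparse_cross_entropy(scores, label)
# Softmax applied twice: once by the output layer, once by the loss
loss_twice = sparse_cross_entropy(softmax(scores), label)

# With 10 classes, the doubly normalized loss cannot fall below this value,
# because the inputs to the second softmax are confined to [0, 1]
floor = np.log(1 + 9 / np.e)

print(loss_once, loss_twice, floor)
```

Even for a confident and correct prediction, the doubly normalized loss stays well above zero, which weakens the training signal.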

Above, we have padded the original \(28 \times 28 \times 1\) NumPy arrays to \(32 \times 32 \times 1\). Next, please refer to the official TensorFlow guide and study on your own how to turn a Keras model into an Estimator model. Then, starting from the Keras LeNet-5 model built earlier in the course, convert it into an Estimator model and complete the training.

Reference learning materials