55. TensorFlow Fashion Item Classification

55.1. Introduction

In this challenge, you will independently complete an open-ended classification exercise: using the Fashion-MNIST dataset of fashion items, build a reasonable deep neural network (DNN) with TensorFlow Keras.

55.2. Key Points

Building neural networks with Keras

Normalization of grayscale data

Flatten and Dropout layers

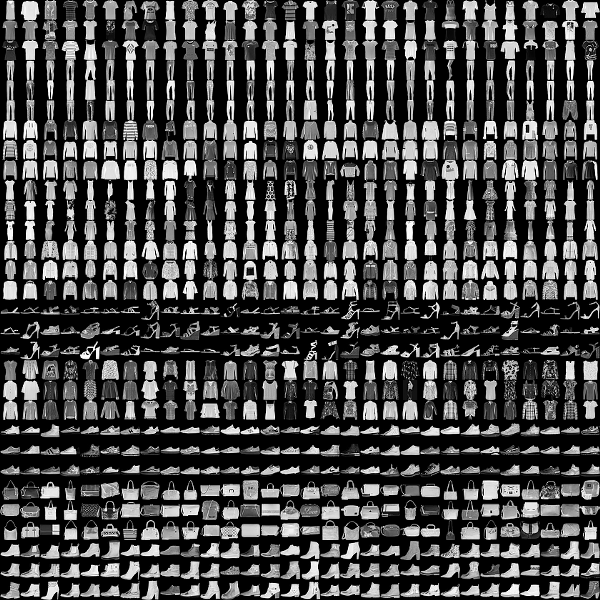

The Fashion-MNIST fashion item dataset contains 70,000 images, including 60,000 grayscale images of 28x28 pixels for the training set and 10,000 images of the same specification for the test set, with a total of 10 fashion item labels.

| Category | Description |
|---|---|
| 0 | T-shirt/top |
| 1 | Trouser |
| 2 | Pullover |
| 3 | Dress |
| 4 | Coat |
| 5 | Sandal |
| 6 | Shirt |
| 7 | Sneaker |
| 8 | Bag |
| 9 | Ankle boot |
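Because the labels are stored as integers from 0 to 9, a small lookup makes plot titles and predictions easier to read. The sketch below copies the names from the table above; `CLASS_NAMES` and `label_to_name` are hypothetical names introduced only for illustration.

```python
# Class names in label order, copied from the table above.
CLASS_NAMES = [
    "T-shirt/top", "Trouser", "Pullover", "Dress", "Coat",
    "Sandal", "Shirt", "Sneaker", "Bag", "Ankle boot",
]


def label_to_name(label):
    """Map an integer label (0-9) to its class name."""
    return CLASS_NAMES[int(label)]


print(label_to_name(9))  # Ankle boot
```

The `int(...)` conversion lets the helper accept NumPy integer labels such as `y_train[0]` as well as plain Python integers.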

Next, we directly load this dataset using TensorFlow.

import tensorflow as tf

(X_train, y_train), (X_test, y_test) = tf.keras.datasets.fashion_mnist.load_data()

# Scale the pixel values to the [0, 1] range

X_train = X_train / 255

X_test = X_test / 255

X_train.shape, X_test.shape, y_train.shape, y_test.shape

Downloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/train-labels-idx1-ubyte.gz

29515/29515 [==============================] - 0s 7us/step

Downloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/train-images-idx3-ubyte.gz

26421880/26421880 [==============================] - 2s 0us/step

Downloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/t10k-labels-idx1-ubyte.gz

5148/5148 [==============================] - 0s 0us/step

Downloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/t10k-images-idx3-ubyte.gz

4422102/4422102 [==============================] - 1s 0us/step

((60000, 28, 28), (10000, 28, 28), (60000,), (10000,))

After loading the data, we can normalize it directly by dividing by 255, because the images are 8-bit grayscale with pixel values from 0 to 255. TensorFlow also provides utilities such as `tf.keras.utils.normalize`, which by default performs L2 normalization along an axis rather than scaling to [0, 1]. Next, we visualize the first sample of the training set as a check:

from matplotlib import pyplot as plt

%matplotlib inline

plt.imshow(X_train[0], cmap=plt.cm.gray)

plt.title(y_train[0])
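The two normalization options mentioned earlier produce different results, which a tiny NumPy sketch can make concrete. The `pixels` array is made-up data, and the L2 step is written by hand on the assumption that it mirrors the default behaviour of `tf.keras.utils.normalize` (`axis=-1`, `order=2`); it is an illustration, not the library's implementation.

```python
import numpy as np

# A tiny stand-in for one row of a grayscale image with uint8 pixel values.
pixels = np.array([[0, 51, 102, 255]], dtype=np.uint8)

# Dividing by 255 maps every value into the [0, 1] range.
scaled = pixels / 255

# L2 normalization along the last axis: each row ends up with unit norm.
as_float = pixels.astype(np.float64)
norms = np.linalg.norm(as_float, ord=2, axis=-1, keepdims=True)
norms[norms == 0] = 1  # leave all-zero rows unchanged instead of dividing by zero
l2_normalized = as_float / norms

print(scaled)
print(np.linalg.norm(l2_normalized, axis=-1))
```

Dividing by 255 keeps the relative brightness between images, whereas per-row L2 normalization rescales each row independently, so the two are not interchangeable.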

Next, we are going to build a DNN network with two hidden layers to complete image classification.

Exercise 55.1

Open Challenge

Challenge: Use TensorFlow Keras to build a fully-connected artificial neural network (ANN) with two hidden layers for fashion item classification.

Requirements: You are free to choose to build using the Keras sequential model or the functional model. You can freely define the neural network structure, loss function, optimization method, etc.

We recommend using the following network, and you can also customize and modify it.

The layers used in the recommended network above are:

- `tf.keras.layers.Flatten`: Flattens each \(28 \times 28\) input matrix into a vector of 784 values.

- `tf.keras.layers.Dropout`: During training, randomly drops units feeding the fully connected layer with a given probability to help prevent overfitting.

Study these two layers in the official documentation, then build and train the network using what you learned in the previous experiments.
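As a rough intuition for what the two layers compute, the following NumPy sketch reproduces their effect on a random batch. It is a simplified illustration rather than the Keras implementation: the `dropout` helper is hypothetical, and it assumes the usual "inverted dropout" scheme in which the surviving units are rescaled by `1 / (1 - rate)` during training.

```python
import numpy as np

rng = np.random.default_rng(0)

# A made-up batch of 4 images with the Fashion-MNIST shape.
batch = rng.random((4, 28, 28))

# Flatten: keep the batch axis and merge the remaining axes.
flat = batch.reshape(batch.shape[0], -1)
print(flat.shape)  # (4, 784)


def dropout(x, rate, rng):
    """Inverted dropout as applied during training."""
    keep_mask = rng.random(x.shape) >= rate  # keep each unit with probability 1 - rate
    return x * keep_mask / (1.0 - rate)      # rescale survivors to preserve the expected sum


dropped = dropout(flat, rate=0.2, rng=rng)
print((dropped == 0).mean())  # fraction of zeroed units, roughly the dropout rate
```

At inference time a dropout layer passes its input through unchanged, so the random masking only affects training.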

Solution to Exercise 55.1

model = tf.keras.models.Sequential()

model.add(tf.keras.layers.Flatten(input_shape=(28, 28)))

model.add(tf.keras.layers.Dense(units=512, activation='relu'))

model.add(tf.keras.layers.Dropout(0.2))

model.add(tf.keras.layers.Dense(units=128, activation='relu'))

model.add(tf.keras.layers.Dropout(0.2))

model.add(tf.keras.layers.Dense(units=10, activation='softmax'))

model.compile(optimizer='adam',

loss='sparse_categorical_crossentropy',

metrics=['accuracy'])

model.fit(X_train, y_train, batch_size=64, epochs=5,

validation_data=(X_test, y_test))
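The requirements also allow the functional API. As a sketch of that alternative, the same architecture can be written as below; the variable name `functional_model` is an assumption made for illustration, and the layer sizes simply repeat those of the sequential solution.

```python
import tensorflow as tf

# Functional-API version of the same architecture as the sequential solution.
inputs = tf.keras.Input(shape=(28, 28))
x = tf.keras.layers.Flatten()(inputs)
x = tf.keras.layers.Dense(512, activation="relu")(x)
x = tf.keras.layers.Dropout(0.2)(x)
x = tf.keras.layers.Dense(128, activation="relu")(x)
x = tf.keras.layers.Dropout(0.2)(x)
outputs = tf.keras.layers.Dense(10, activation="softmax")(x)

functional_model = tf.keras.Model(inputs=inputs, outputs=outputs)
functional_model.compile(
    optimizer="adam",
    loss="sparse_categorical_crossentropy",
    metrics=["accuracy"],
)
functional_model.summary()
```

Training works the same way as for the sequential model, for example by calling `functional_model.fit(...)` with the arguments used in the solution.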

Finally, the challenge expects you to report the training-set and test-set accuracy after a reasonable number of epochs, ideally close to 90% or higher. For example:

Expected output

Train on 60000 samples, validate on 10000 samples

Epoch 1/5

60000/60000 [==============================] - loss: 0.3098 - acc: 0.8856 - val_loss: 0.3455 - val_acc: 0.8776

Epoch 2/5

60000/60000 [==============================] - loss: 0.2981 - acc: 0.8891 - val_loss: 0.3352 - val_acc: 0.8784

Epoch 3/5

60000/60000 [==============================] - loss: 0.2885 - acc: 0.8914 - val_loss: 0.3346 - val_acc: 0.8741

Epoch 4/5

60000/60000 [==============================] - loss: 0.2802 - acc: 0.8942 - val_loss: 0.3349 - val_acc: 0.8808

Epoch 5/5

60000/60000 [==============================] - loss: 0.2738 - acc: 0.8982 - val_loss: 0.3197 - val_acc: 0.8851
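To relate the `loss` and `acc` columns in the log above to the model's softmax outputs, here is a minimal NumPy sketch of how sparse categorical cross-entropy and accuracy are defined. The `probs` and `labels` arrays are made-up values for three samples and three classes, not results from the model.

```python
import numpy as np

# Hypothetical softmax outputs for 3 samples and 3 classes (each row sums to 1).
probs = np.array([
    [0.7, 0.2, 0.1],
    [0.1, 0.8, 0.1],
    [0.3, 0.4, 0.3],
])
labels = np.array([0, 1, 2])  # integer ("sparse") labels

# Sparse categorical cross-entropy: mean negative log-probability of the true class.
true_class_probs = probs[np.arange(len(labels)), labels]
loss = -np.log(true_class_probs).mean()

# Accuracy: fraction of samples whose most probable class equals the label.
accuracy = (probs.argmax(axis=1) == labels).mean()

print(loss, accuracy)
```

The loss depends on how confident each prediction is, while accuracy only checks the top class, which is why the two columns do not always improve together from one epoch to the next.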

Related links