47. Overview and Examples of Deep Learning

47.1. Introduction

Deep learning is a family of machine learning methods that use artificial neural networks with many layers to learn representations of data. In this experiment, we will cover the concepts and characteristics of deep learning, as well as how deep learning differs from and relates to machine learning.

47.2. Key Points

Introduction to Machine Learning

Introduction to Deep Learning

Development of Deep Learning

47.3. Introduction to Machine Learning

Before turning to deep learning itself, we first introduce the broader field it belongs to: machine learning.

Machine learning is a branch of artificial intelligence. At its core are machine learning algorithms, which improve their own performance by gaining experience from data. The field has existed for decades, but with the rapid progress of computing and related fields in recent years, it has become popular again.

What exactly is machine learning? A good place to start is its definition. One classic definition comes from the 1997 textbook Machine Learning by computer scientist Tom M. Mitchell:


A computer program is said to learn from experience E with respect to some class of tasks T and performance measure P if its performance at tasks in T, as measured by P, improves with experience E.

In other words: for a class of tasks T and a performance measure P, if a computer program's performance on T, as measured by P, improves with experience E, then the program is said to learn from experience E.

If this definition feels too academic, here is the short version: it is about "learning", and the core idea is that a computer program improves its performance by accumulating experience.
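To make T, P, and E concrete, here is a minimal Python sketch built on invented assumptions.

- The task T is labeling integers 0 to 99 as 1 when x >= 50.
- The performance measure P is accuracy over that whole range.
- The experience E is a stream of labeled examples.

The functions `true_label`, `learn_threshold`, and `accuracy`, and the example stream, are hypothetical. They were chosen only so that P visibly improves as E grows.

```python
# Task T (hypothetical): label an integer x in 0..99 as 1 if x >= 50, else 0.
# The learner never sees this rule directly; it only sees labeled examples.
def true_label(x: int) -> int:
    return 1 if x >= 50 else 0


def learn_threshold(examples: list[tuple[int, int]]) -> float:
    """Learn a decision threshold from (x, label) pairs, i.e. experience E.

    The threshold is placed midway between the largest x labeled 0 and the
    smallest x labeled 1 seen so far (defaults cover the empty cases).
    """
    max_neg = max((x for x, y in examples if y == 0), default=0)
    min_pos = min((x for x, y in examples if y == 1), default=99)
    return (max_neg + min_pos) / 2


def accuracy(threshold: float) -> float:
    """Performance measure P: fraction of 0..99 labeled correctly."""
    correct = sum(
        (1 if x >= threshold else 0) == true_label(x) for x in range(100)
    )
    return correct / 100


# Experience E arrives as a stream of labeled examples (hypothetical values).
stream = [20, 99, 40, 70, 48, 53, 49, 50]
examples = [(x, true_label(x)) for x in stream]

for n in (2, 4, 6, 8):
    t = learn_threshold(examples[:n])
    print(f"{n} examples -> threshold {t}, accuracy {accuracy(t):.2f}")
```

With this particular stream, accuracy rises from 0.90 after two examples to 1.00 after eight. The learner is deliberately trivial. The point is only that P is measured on T and improves as E accumulates, which is what Mitchell's definition describes.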

The computer program in this definition is built around what we call a "machine learning algorithm", which is grounded in basic mathematical theories and methods. Given an algorithm that can learn on its own, the program can automatically extract patterns from training data and use those patterns to make predictions on data it has not seen before.
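The sketch below illustrates extracting a pattern from training data and applying it to unseen data. It fits a straight line by ordinary least squares, the method behind the linear regression covered later in this course. The data points and the function names `fit_line` and `predict` are hypothetical.

```python
def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of co-deviations divided by sum of squared x deviations gives the slope.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(slope: float, intercept: float, x: float) -> float:
    """Apply the learned pattern to a new input."""
    return slope * x + intercept


# Hypothetical training data that roughly follows y = 2x + 1 with some noise.
train_x = [1.0, 2.0, 3.0, 4.0, 5.0]
train_y = [3.1, 4.9, 7.2, 8.8, 11.0]

slope, intercept = fit_line(train_x, train_y)
print(f"learned pattern: y = {slope:.2f} * x + {intercept:.2f}")
print(f"prediction for unseen x = 10: {predict(slope, intercept, 10.0):.2f}")
```

Here the "pattern" is just two numbers, a slope of about 1.97 and an intercept of about 1.09. Predicting x = 10, which never appeared in training, gives about 20.79.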

47.4. Machine Learning & Deep Learning & Artificial Intelligence

Media reports and academic materials frequently use three terms: machine learning, deep learning, and artificial intelligence. Yet the relationship between them is often unclear. Does one contain another, do they overlap, or are they completely independent?

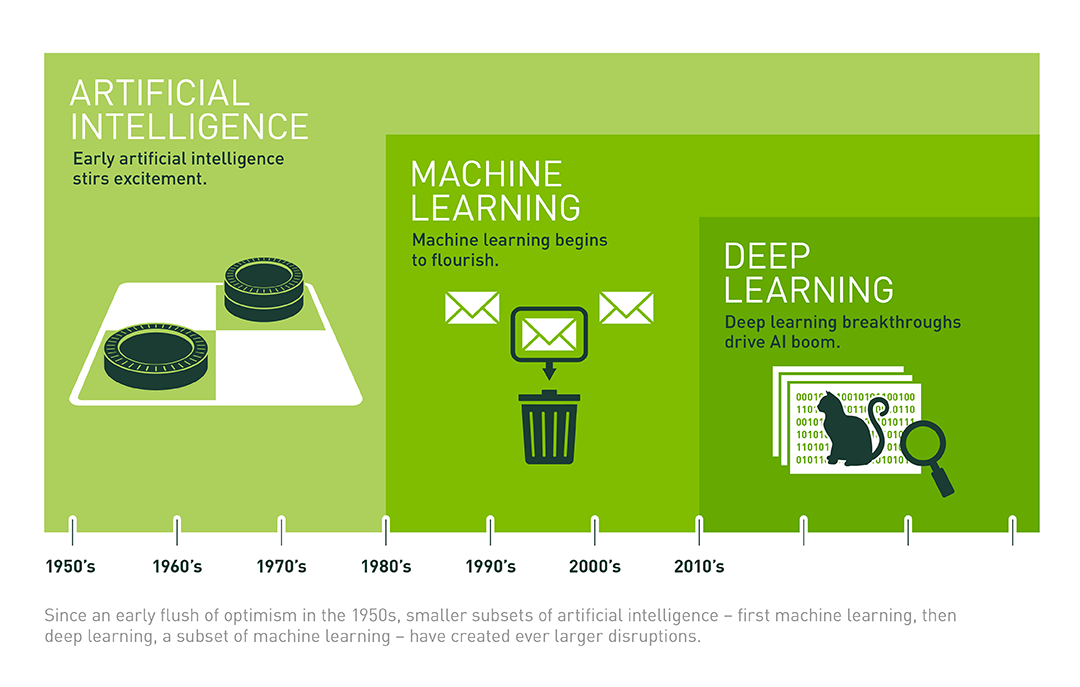

Here we draw on an article by senior technology journalist Michael Copeland to explain the relationship. Of the three, artificial intelligence emerged first: the term was proposed by John McCarthy in 1956. At the time, researchers were eager to design a “machine that could perform tasks with characteristics of human intelligence”.

Researchers then conceived of machine learning, whose core goal is to find ways of achieving artificial intelligence. This gave rise to many machine learning methods, such as Naive Bayes, decision tree learning, and artificial neural networks. The artificial neural network (ANN) is an algorithm inspired by the biological structure of the brain.

Deep learning came later still. Its key idea is to build deep neural networks with more neurons and more layers. On some tasks, such as certain image recognition benchmarks, these deep networks have matched or even exceeded human performance.

Putting this together gives the relationship diagram below. Machine learning is one means of achieving artificial intelligence, and deep learning is a specific family of methods within machine learning.

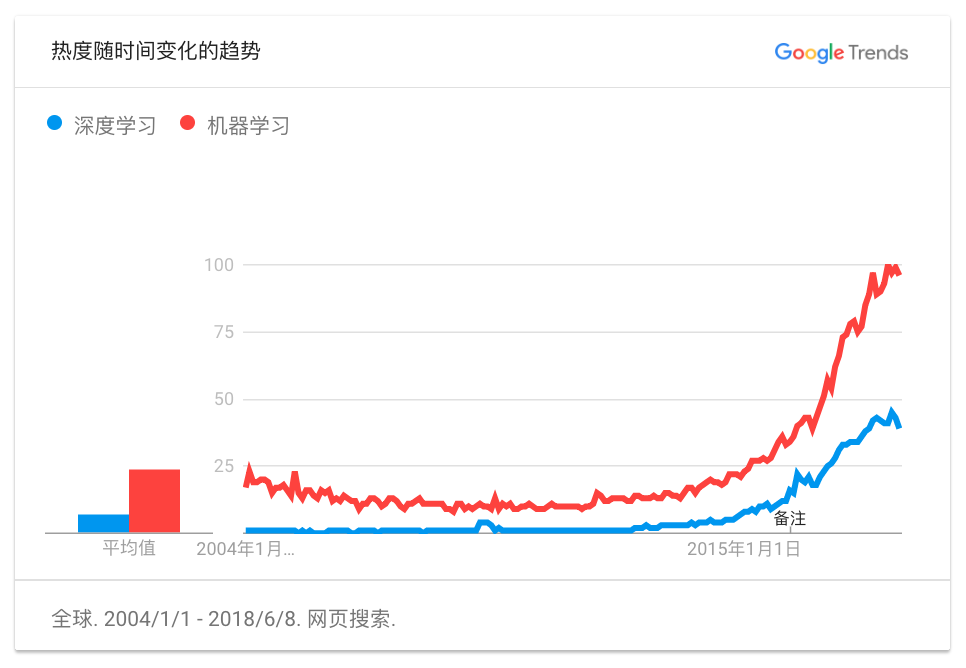

If you search Google Trends for “deep learning” and “machine learning”, you can see how interest in both has changed over time. In the five years or so before this course was written, both terms grew explosively, with search interest rising dozens of times over.

Many people first heard of deep learning through AlphaGo. AlphaGo did raise public awareness of the technology and helped accelerate its development. Deep learning did not appear out of nowhere, however, so let's review its history.

47.5. The Development History of Deep Learning

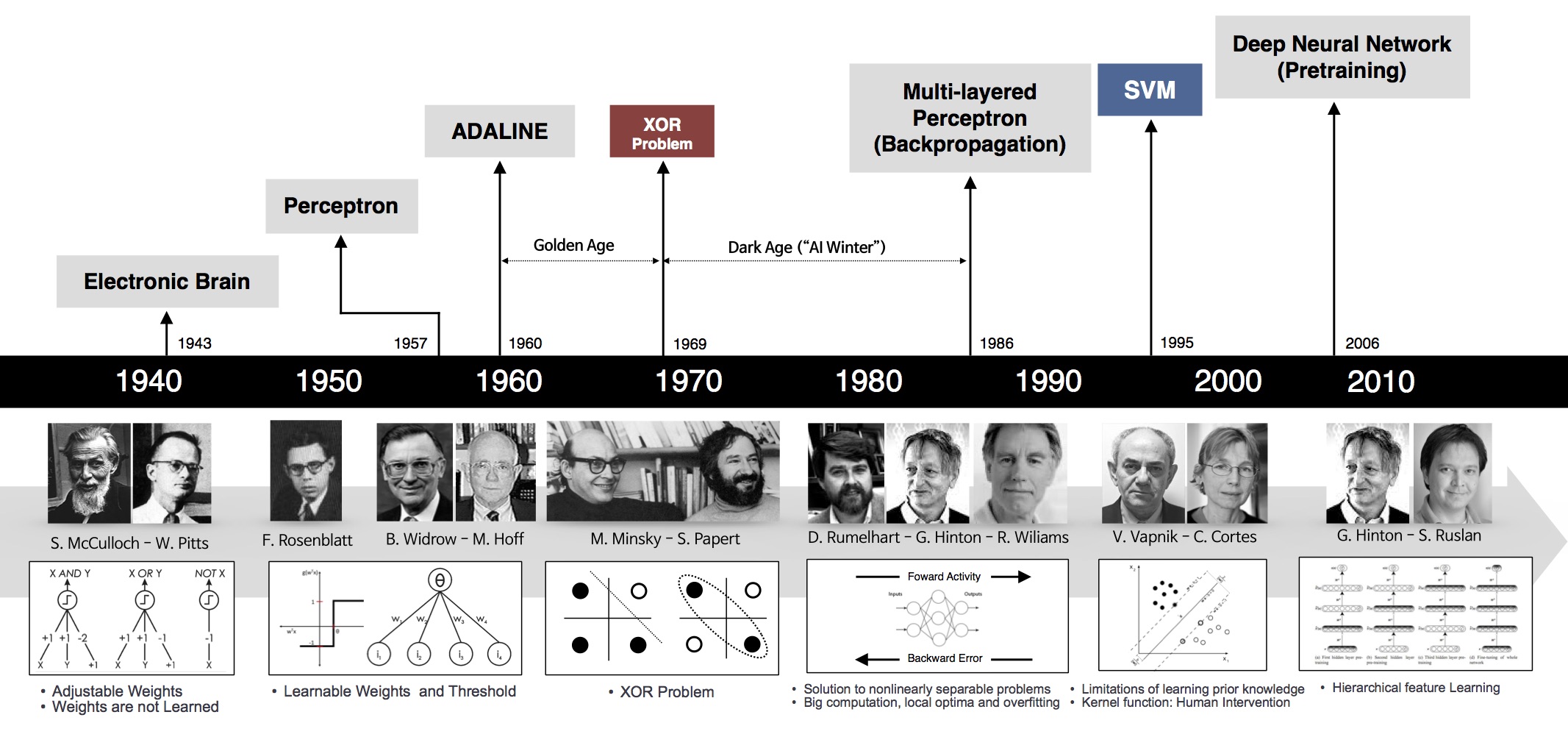

The history of deep learning is essentially the history of artificial neural networks. The figure above shows a timeline of artificial neural network development from 1940 to 2000.

Deep learning relies mainly on deep neural networks, and the earliest neural networks date back to 1943. That year, neurophysiologist Warren S. McCulloch and logician Walter Pitts proposed a mathematical model of the neuron, now called the McCulloch-Pitts neuron, which treats a neuron as a simple threshold logic unit.
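The McCulloch-Pitts idea can be sketched in a few lines. A unit sums binary inputs and fires (outputs 1) when the sum reaches a threshold. With hand-chosen weights and thresholds, such a unit computes logic functions.

This is a simplified sketch. The original model used absolute inhibitory inputs, which are approximated here by a negative weight. The function names are hypothetical.

```python
def mp_neuron(inputs: list[int], weights: list[int], threshold: int) -> int:
    """Fire (return 1) if the weighted sum of binary inputs reaches the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0


# Logic gates built from a single unit with fixed, hand-chosen parameters.
def AND(a: int, b: int) -> int:
    return mp_neuron([a, b], [1, 1], threshold=2)


def OR(a: int, b: int) -> int:
    return mp_neuron([a, b], [1, 1], threshold=1)


def NOT(a: int) -> int:
    # A negative weight stands in for an inhibitory input (a simplification).
    return mp_neuron([a], [-1], threshold=0)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "AND:", AND(a, b), "OR:", OR(a, b))
print("NOT 0:", NOT(0), "NOT 1:", NOT(1))
```

Nothing here is learned: every weight and threshold is fixed by hand. Learning such parameters from data is what the perceptron added.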

The next important milestone came in 1957, when psychologist Frank Rosenblatt, then working at the Cornell Aeronautical Laboratory, invented the perceptron algorithm. He built a feedforward artificial neural network to perform binary linear classification, one of the first uses of neural networks for classification.
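Below is a minimal sketch of the perceptron learning rule for binary linear classification. The model predicts with a step function on w·x + b. Whenever a prediction is wrong, it adjusts w and b by the learning rate times the error times the input.

The following choices are assumptions made for illustration, not details from Rosenblatt's original work:

- the training data (the logical AND function),
- the learning rate of 1.0,
- zero initialization,
- the epoch limit.

```python
def predict(w: list[float], b: float, x: tuple[int, int]) -> int:
    """Step activation: output 1 if w . x + b > 0, else 0."""
    return 1 if w[0] * x[0] + w[1] * x[1] + b > 0 else 0


def train_perceptron(data, lr: float = 1.0, max_epochs: int = 100):
    """Perceptron learning rule. Returns (w, b, converged)."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x, y in data:
            error = y - predict(w, b, x)  # -1, 0, or +1
            if error != 0:
                # Adjust w and b to reduce the error on this example.
                w[0] += lr * error * x[0]
                w[1] += lr * error * x[1]
                b += lr * error
                mistakes += 1
        if mistakes == 0:  # a full pass with no errors: the data is separated
            return w, b, True
    return w, b, False


AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w, b, converged = train_perceptron(AND_DATA)
print("converged:", converged, "w:", w, "b:", b)
print([predict(w, b, x) for x, _ in AND_DATA])
```

On AND, the loop stops after a few epochs with a separating line. On XOR data, the same function returns `converged=False` however large `max_epochs` is, which is the limitation described next.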

In 1969, Marvin Minsky and Seymour Papert showed in their book Perceptrons that a single-layer perceptron cannot solve the exclusive OR (XOR) problem. They also argued that computers of the time lacked the power to handle the long computation times that large neural networks would require. As a result, neural network research stalled.
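A few lines of algebra show why a single-layer perceptron cannot solve XOR. Assume, as with the step-function perceptron, that a unit outputs 1 when $w_1 x_1 + w_2 x_2 + b > 0$ and 0 otherwise. The four XOR cases then impose four constraints:

$$
\begin{aligned}
(0,0) \mapsto 0 &: \quad b \le 0 \\
(1,0) \mapsto 1 &: \quad w_1 + b > 0 \\
(0,1) \mapsto 1 &: \quad w_2 + b > 0 \\
(1,1) \mapsto 0 &: \quad w_1 + w_2 + b \le 0
\end{aligned}
$$

Adding the middle two constraints gives $w_1 + w_2 + 2b > 0$, so

$$
w_1 + w_2 + b > -b \ge 0,
$$

which contradicts the last constraint. No choice of $w_1, w_2, b$ satisfies all four, so no single straight line separates the two XOR classes.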

Fortunately, the backpropagation algorithm emerged in 1975. More importantly, it solved the problem of how to train multi-layer neural networks, and multi-layer networks can represent XOR. In 1986, David Rumelhart et al. gave a comprehensive exposition of the algorithm. Backpropagation is an important milestone in the development of neural networks and is still used to train today's deep neural networks.
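The sketch below shows backpropagation at work on the XOR problem. A network with one hidden layer is trained by full-batch gradient descent, and the chain rule passes the output error back through the layers.

All of the following are illustrative assumptions:

- the architecture (8 tanh hidden units, a sigmoid output, cross-entropy loss),
- the learning rate,
- the epoch count,
- the random seed.

It is written in plain Python rather than a deep learning framework so that every step is visible.

```python
import math
import random

random.seed(0)  # fixed seed so the run is repeatable

# XOR truth table: inputs and targets.
X = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0)]
Y = [0.0, 1.0, 1.0, 0.0]

H = 8     # number of hidden units (illustrative choice)
LR = 0.5  # learning rate (illustrative choice)

# Input->hidden weights/biases and hidden->output weights/bias.
W1 = [[random.gauss(0.0, 1.0), random.gauss(0.0, 1.0)] for _ in range(H)]
b1 = [0.0] * H
W2 = [random.gauss(0.0, 1.0) for _ in range(H)]
b2 = 0.0


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def forward(x):
    """Return hidden activations and the output probability for one input."""
    h = [math.tanh(W1[j][0] * x[0] + W1[j][1] * x[1] + b1[j]) for j in range(H)]
    p = sigmoid(sum(W2[j] * h[j] for j in range(H)) + b2)
    return h, p


def mean_loss() -> float:
    """Mean binary cross-entropy over the four XOR cases."""
    total = 0.0
    for x, y in zip(X, Y):
        _, p = forward(x)
        total -= y * math.log(p) + (1.0 - y) * math.log(1.0 - p)
    return total / len(X)


initial_loss = mean_loss()
for epoch in range(10000):
    gW1 = [[0.0, 0.0] for _ in range(H)]
    gb1 = [0.0] * H
    gW2 = [0.0] * H
    gb2 = 0.0
    for x, y in zip(X, Y):
        h, p = forward(x)  # forward pass
        d_out = p - y      # dLoss/dz at the output (sigmoid + cross-entropy)
        gb2 += d_out
        for j in range(H):
            gW2[j] += d_out * h[j]
            d_h = d_out * W2[j] * (1.0 - h[j] ** 2)  # chain rule through tanh
            gW1[j][0] += d_h * x[0]
            gW1[j][1] += d_h * x[1]
            gb1[j] += d_h
    # Gradient descent step using the mean gradient over the batch.
    n = len(X)
    for j in range(H):
        W2[j] -= LR * gW2[j] / n
        W1[j][0] -= LR * gW1[j][0] / n
        W1[j][1] -= LR * gW1[j][1] / n
        b1[j] -= LR * gb1[j] / n
    b2 -= LR * gb2 / n

final_loss = mean_loss()
print(f"loss: {initial_loss:.4f} -> {final_loss:.4f}")
for x in X:
    print(x, "->", round(forward(x)[1], 3))
```

After training, rounding the outputs should reproduce the XOR truth table, which a single-layer perceptron cannot do. Frameworks such as TensorFlow and PyTorch compute these gradients automatically, so the backward pass rarely has to be written by hand.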

Later, other methods that were simpler to use, such as support vector machines, drew researchers' attention away from neural networks. After 2000, the rise of deep neural networks rekindled enthusiasm for artificial neural networks.

The account above covers only the main line of development. Alongside it, the Neocognitron, proposed in 1979, can be regarded as the forerunner of convolutional neural networks. LeNet became the first complete convolutional neural network; Yann LeCun and colleagues developed it starting in 1989 and refined it into LeNet-5 in 1998. AlexNet's victory in the 2012 ImageNet Large Scale Visual Recognition Challenge stunned the field. Deeper and larger networks such as VGG and ResNet followed. All of these are convolutional neural networks.

Recurrent neural networks and generative adversarial networks are also developing rapidly. It is fair to say that artificial neural networks are now in their spring. We have enough computing power to train large-scale deep neural networks and enough theoretical grounding to keep moving forward.

In the rest of this course, we start with linear regression, then study how perceptrons and artificial neural networks work, and finally learn to build more complex deep neural networks.

Deep learning frameworks have greatly reduced the cost and time of implementing deep neural networks. There are quite a few mainstream frameworks today. For in-depth study, we have chosen TensorFlow, developed mainly by Google, and PyTorch, developed mainly by Facebook (now Meta).

After covering the basic usage of these frameworks, the course explains convolutional neural networks (CNNs), recurrent neural networks (RNNs), and generative adversarial networks (GANs) in depth. Along the way, you will learn both the theory behind each network structure and how to use the corresponding modules in open-source frameworks. The course ends with more deep learning engineering practices, including automated deep learning and the training and deployment of deep learning models.

47.6. Summary

This experiment gave a brief introduction to deep learning and reviewed its history. Deep learning is a subset of machine learning, so prior knowledge of machine learning will be very helpful for completing this course.
